How Ambient delivers proactive security using machine learning and Modelbit

by Daniel Mewes, Staff Software Engineer

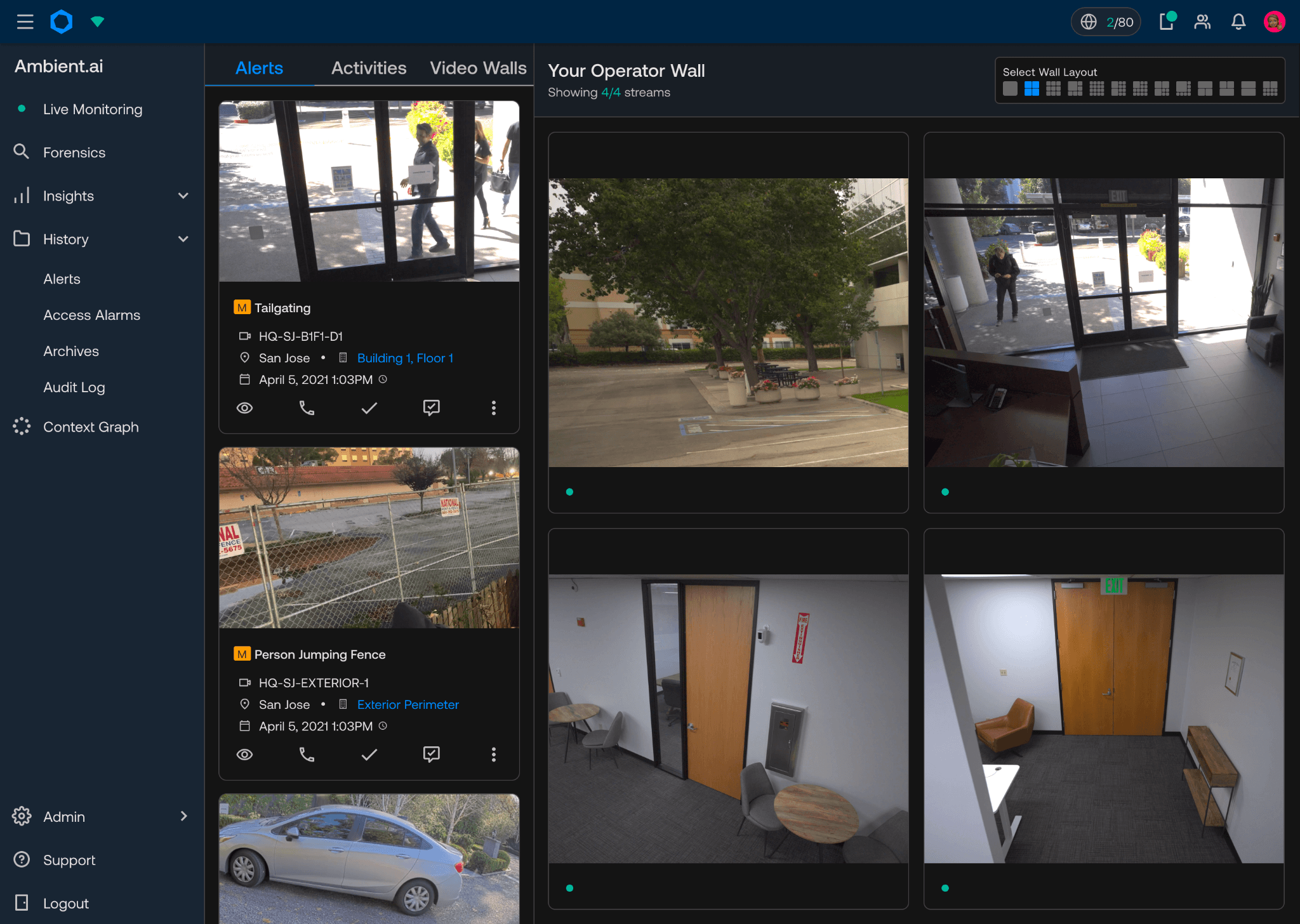

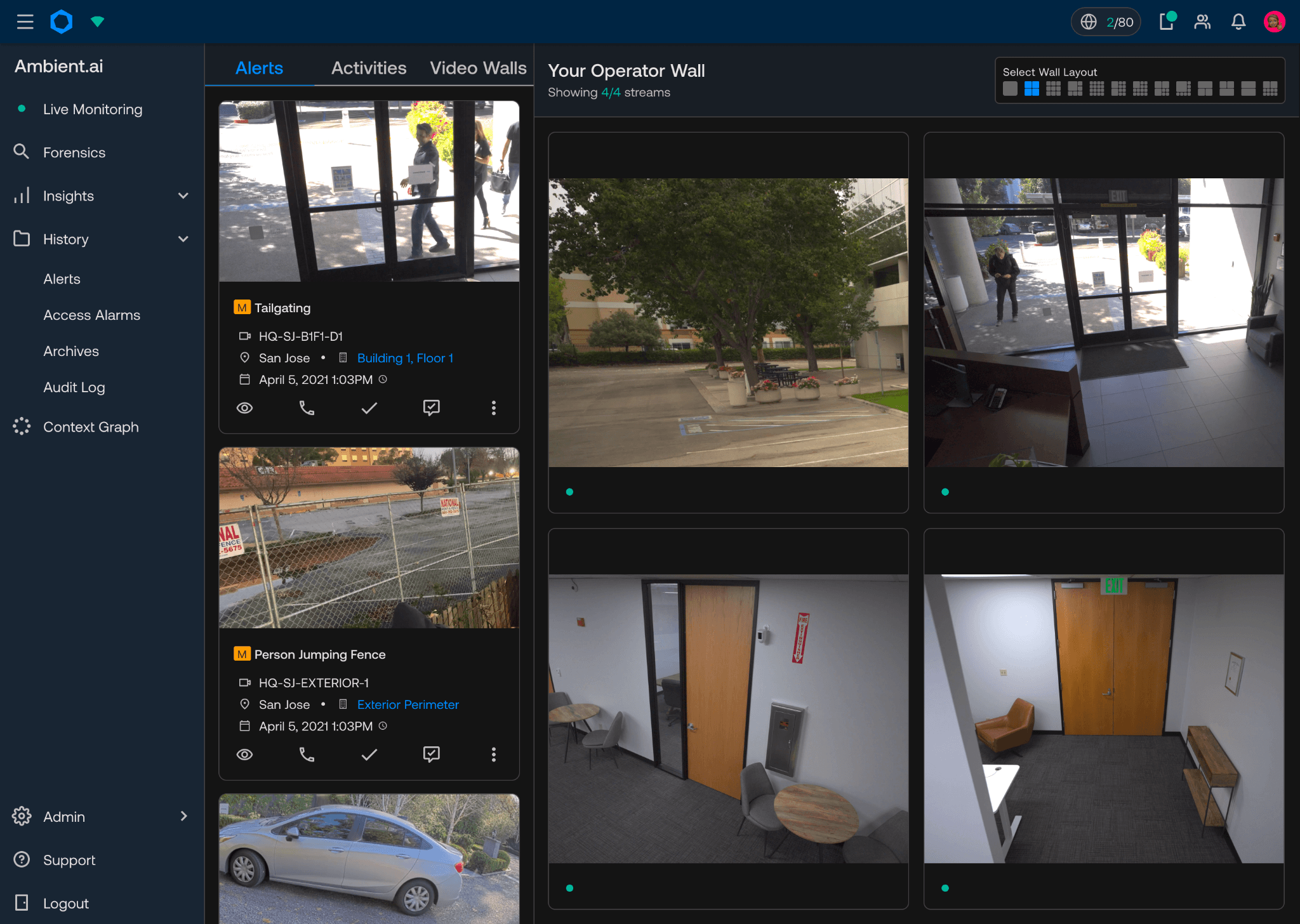

At Ambient, we use machine learning to offer proactive security capabilities to our customers. We not only detect a wide range of complex activities in a security camera video stream, but our software is also able to understand the context in which these activities take place. This allows us to distinguish normal activity from threats, which spares our customers alert fatigue without missing anything important.

Our baseline functionality is the detection of safety-critical events such as weapon brandishing, perimeter breaches, or medical emergencies. Beyond that, our museum customers want to know if anyone is touching the priceless artwork. Our corporate customers need to know if an unauthorized person is “tailgating” into an office without a badge.

We complement this proactive detection with some of the most advanced video search functionality on the market. Our product can answer ad-hoc queries like “Show me the first video frame where this suspicious car appears”, searching through terabytes of video footage and returning results in under a second.

All of this functionality is powered by machine learning models that we train and develop in-house.

Fast iteration is key

Recently, we have seen some of the highest rates of new developments and advancements in computer vision to date. Just as large language models have developed at a rapid pace, we are now seeing new breakthroughs in computer vision on a weekly basis. Recent research has focused on multi-modal foundation models, which combine text and image understanding. Such large, self-supervised models have been shown to provide a much deeper and more universal understanding of image data than ever before.

At Ambient.ai, we’re leveraging these innovations to deliver groundbreaking new functionality to our customers. However, not all new developments are practical to deploy in production as is. It turns out that footage from security cameras looks quite different from the kinds of online imagery and video data that many off-the-shelf models are trained on. Not every new technology performs equally well when applied to our use case. Oftentimes, models need to be modified and/or fine-tuned to suit our needs.

With Modelbit, we’ve been able to experiment with new models faster than ever before. It frequently takes us less than an hour to have a brand new experimental model up and running on Modelbit, where it is ready to be integrated into a full-stack prototype or shadow deployment. Once a model is up, we’re able to iterate in a matter of minutes. It feels a bit like magic every time I make a change to our model code, push it to GitHub, and see it live on Modelbit just seconds later.

Supporting a hybrid architecture

For many of our customers, Ambient monitors thousands of security camera video streams 24/7. When it comes to alerting about a critical security threat, every second counts, so our computer vision models need to run continuously and at low latency. Privacy is also extremely important to us. In fact, we’ve designed our entire architecture to guarantee that sensitive security camera footage does not end up in the wrong hands. To address both of these requirements, raw video footage is processed and stored entirely on-prem, within our customers’ own networks.

However, the most powerful computer vision models available today require large amounts of compute. Thanks to our proprietary technology, we are able to combine the benefits of on-prem storage and processing with the unique capabilities of large, state-of-the-art computer vision models running in the cloud. We are able to separate the processing and storage of raw video from the additional contextualization that happens down the line, and Modelbit has been an ideal platform for hosting the latter. As our load increases, Modelbit automatically scales up our model deployments to add more compute resources as needed, maintaining a low-latency envelope at all times.

Magical, AI-powered product features

The goal of our object detection models is to give our customers high-level superpowers when interacting with the video feeds from their security cameras. For example, customers may be watching video and notice that a car is behaving suspiciously. Once they know it’s a suspicious car, they’ll want to know the first time the car appeared on camera. Or if there’s a highly valuable object in the wrong place, they’ll want to know the last time that object interacted with a human who probably moved it.

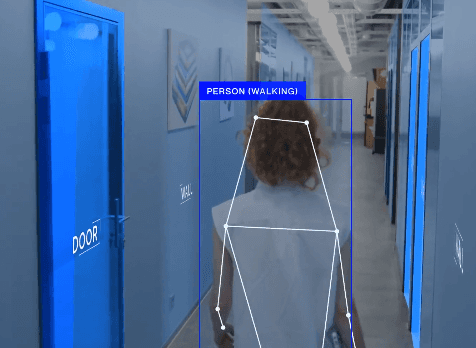

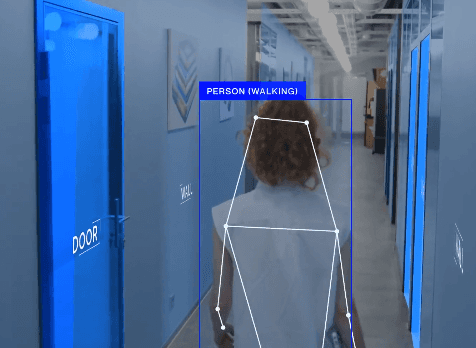

Ambient’s product interface allows our customers to simply click on an object, automatically identify its type, and then ask all those questions and more. When users interactively click an object in a video feed, we invoke a segmentation model to obtain the mask of the object. Then, an image classification model determines the object class and the relevant types of interactions that the user can then search for.
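The click-to-query flow described above can be sketched roughly as follows. This is an illustrative sketch only, not Ambient's production code: `handle_click`, `ClickResult`, `INTERACTIONS_BY_CLASS`, and the two stub models are hypothetical names introduced here, and the frame is a toy grid of pixel values rather than real video.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Frame = List[List[int]]   # toy grayscale frame: rows of pixel values
Mask = List[List[bool]]   # True where a pixel belongs to the clicked object

# Hypothetical mapping from object class to the searches offered to the user.
INTERACTIONS_BY_CLASS: Dict[str, List[str]] = {
    "car": ["first appearance", "last appearance"],
    "artwork": ["last touched by a person"],
}


@dataclass
class ClickResult:
    object_class: str
    mask_area: int            # number of pixels covered by the mask
    interactions: List[str]   # searches the user can run next


def handle_click(
    frame: Frame,
    click_xy: Tuple[int, int],
    segment: Callable[[Frame, Tuple[int, int]], Mask],
    classify: Callable[[Frame, Mask], str],
) -> ClickResult:
    """Run segmentation, then classification, for one user click."""
    mask = segment(frame, click_xy)        # step 1: mask of the clicked object
    object_class = classify(frame, mask)   # step 2: class of the masked object
    area = sum(cell for row in mask for cell in row)
    interactions = INTERACTIONS_BY_CLASS.get(object_class, [])
    return ClickResult(object_class, area, interactions)


# Stand-ins for the real models, present only so the sketch runs end to end.
def stub_segment(frame: Frame, click_xy: Tuple[int, int]) -> Mask:
    x, y = click_xy
    target = frame[y][x]
    # Treat every pixel with the clicked value as part of the object.
    return [[pixel == target for pixel in row] for row in frame]


def stub_classify(frame: Frame, mask: Mask) -> str:
    return "car"


frame = [
    [0, 0, 0, 0],
    [0, 7, 7, 0],
    [0, 7, 7, 0],
]
result = handle_click(frame, (1, 1), stub_segment, stub_classify)
print(result)
```

Passing the two models in as plain callables keeps the orchestration independent of where each model runs, so either stage could be swapped for a call to a remotely hosted model.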

Real-time threat detection

We proactively alert our customers when alarming scenarios are playing out on their security video feed. These are typically more subtle than merely detecting an object: A knife lying on a table in the kitchen is fine, but a person brandishing a knife at others is not. The context and temporal sequence of events are crucial! Large multi-modal models that combine vision encodings with a large language model allow us to rapidly iterate on our detection capabilities for complex, security-relevant scenarios. We’re even able to tailor detection patterns to each customer’s individual needs.

Today, we’ve already deployed real-time-capable multi-modal models within our on-premises appliances. These are complemented by even larger models running in the cloud on Modelbit.

Training and Deploying our Models

At Ambient, our experienced machine perception team researches and trains all of these models. This team typically works in interactive notebook-based environments like Jupyter notebooks and Google Colab. These interactive environments help them quickly evaluate different model types and combinations of models against the training data.

However, getting large, complex pipelines of models into production where they can run fast and scalably is a challenge. In addition, many of these models need to run in exactly the same Python environment with the same versions of Python and Python packages that were used in training them. They may also have specific hardware requirements like certain GPUs or system architectures.

Modelbit allows the machine perception team to quickly run “modelbit.deploy()” from their notebooks where the models are trained and evaluated.
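To make the environment-matching requirement concrete, here is a minimal sketch of how a notebook could record the Python version and package versions it trained with, so that the same versions can be requested at deploy time. The helper `capture_environment` and the package list are hypothetical. The commented-out deployment call reflects our reading of Modelbit's public Python API (`modelbit.login()` followed by `mb.deploy(...)` with `python_version` and `python_packages` arguments) and should be checked against the current Modelbit documentation; `classify_object` is a placeholder for an inference function.

```python
import sys
from importlib import metadata
from typing import Dict, List


def capture_environment(package_names: List[str]) -> Dict[str, object]:
    """Record the running Python version and the installed package versions."""
    python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    pinned: List[str] = []
    missing: List[str] = []
    for name in package_names:
        try:
            pinned.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            # Report packages that are not installed instead of guessing a version.
            missing.append(name)
    return {
        "python_version": python_version,
        "python_packages": pinned,
        "missing": missing,
    }


# Hypothetical package list; use whatever the model actually imports.
env = capture_environment(["torch", "numpy"])
print(env)

# Assumed usage from a notebook (requires a Modelbit account, not run here):
# import modelbit
# mb = modelbit.login()
# mb.deploy(
#     classify_object,
#     python_version=env["python_version"],
#     python_packages=env["python_packages"],
# )
```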

Managing the models at scale

At Ambient, we use GitHub to manage our production codebase and perform code reviews. This applies to research scientists on the machine perception team, as well as the software engineers on our ML Infra and platform teams.

We’ve found that Modelbit integrates perfectly with this workflow. We use git branches with Modelbit to deploy models into staging environments until they’re ready to go to production. This isolates in-progress models from production and gives their authors dedicated staging environments in which to work in a production-like setting.

Since Modelbit is backed by our GitHub repo, when a model is ready for production, its author will send a Pull Request to merge it to the “main” branch. A second engineer will review the PR and, if it’s ready, approve it. Merging a branch to “main” immediately kicks off the deployment of the model to production.

We also make good use of Modelbit’s Endpoints feature to manage load on models. Just because a model is deployed to production doesn’t mean we want it to get full production load right away. Every previous deployment version is maintained automatically in Modelbit, and we can toggle load between earlier and later versions at will. Typically, after deploying via the GitHub PR, we will shadow deploy the model for a period of time to ensure it’s working properly. Then we’ll A/B test the new version against the previous one before fully committing to it. We maintain these shadow deployments and A/B tests on both staging and production. If there’s ever an issue with a fully deployed model, we can always roll back.
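The rollout stages described above (shadow, A/B, full, rollback) can be illustrated with a small router. This is a generic sketch of the technique, not how Modelbit's Endpoints feature is implemented or configured: `VersionRouter`, its modes, and `candidate_share` are names made up for this example, and the two "models" are trivial stand-in functions.

```python
import random
from typing import Any, Callable, List, Optional, Tuple

Model = Callable[[Any], Any]


class VersionRouter:
    """Routes requests between a current and a candidate model version."""

    MODES = {"shadow", "ab", "full", "rollback"}

    def __init__(
        self,
        current: Model,
        candidate: Model,
        mode: str = "shadow",
        candidate_share: float = 0.5,
        rng: Optional[random.Random] = None,
    ) -> None:
        if mode not in self.MODES:
            raise ValueError(f"unknown mode: {mode}")
        self.current = current
        self.candidate = candidate
        self.mode = mode
        self.candidate_share = candidate_share  # fraction of A/B traffic
        self.rng = rng or random.Random()
        # (request, served result, shadow result) tuples for offline comparison.
        self.shadow_log: List[Tuple[Any, Any, Any]] = []

    def predict(self, request: Any) -> Any:
        if self.mode == "shadow":
            served = self.current(request)
            try:
                shadow = self.candidate(request)
            except Exception as exc:
                # A failing candidate must never affect the served response.
                shadow = exc
            self.shadow_log.append((request, served, shadow))
            return served
        if self.mode == "ab":
            use_candidate = self.rng.random() < self.candidate_share
            return self.candidate(request) if use_candidate else self.current(request)
        if self.mode == "full":
            return self.candidate(request)
        return self.current(request)  # "rollback": all traffic to the old version


def old_model(request: Any) -> str:
    return f"v1:{request}"


def new_model(request: Any) -> str:
    return f"v2:{request}"


router = VersionRouter(old_model, new_model, mode="shadow")
print(router.predict("frame-1"))   # callers still get the v1 answer
print(router.shadow_log)           # the v2 answer is recorded for comparison
```

In shadow mode the candidate sees real traffic but its output is only logged, which is what makes it safe to run before any A/B split exposes it to callers.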

Business impacts

Nothing we develop at Ambient has any value if we can’t deliver it to our customers. The work of our machine perception team, combined with flexible and rapid deployment using Modelbit, allows us to deliver differentiated machine learning-based features to our customers. Our ability to quickly develop, evaluate, and deploy cutting-edge AI technologies is how we’ve established a winning position in the market, and how we continue to differentiate ourselves from our competitors.

Modelbit’s fast and flexible approach allows us to scale up rapidly as we bring new models online, and easily try out new architectures or new combinations of models. The fact that we can do this safely, with the platform engineering team overseeing production environments while machine perception researchers deploy their models, gives us an agility edge that is unmatched in our market.
